Unreliable Randomness: Why LLMs Struggle with Statistical Sampling and Its Impact on Enterprise AI

Explore how Large Language Models (LLMs) fundamentally struggle with accurate statistical sampling, impacting critical business applications like synthetic data and content generation. Learn why external tools are essential for reliable AI.

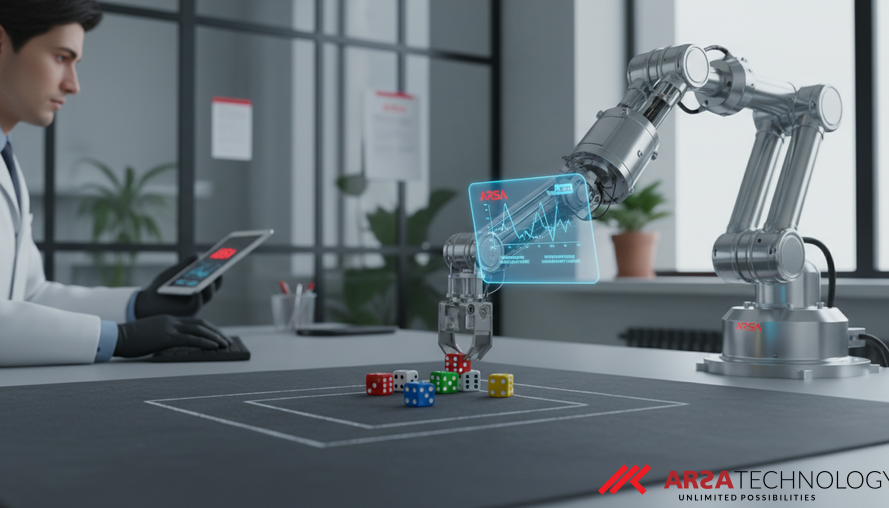

The Hidden Flaw: Why Large Language Models Are "Bad Dice Players"

As Artificial Intelligence (AI) and the Internet of Things (IoT) reshape industries worldwide, Large Language Models (LLMs) are moving well beyond simple chatbots. They now sit inside operational pipelines that drive automated content generation, complex simulations, and synthetic data creation. This expanded role brings a demanding requirement: the ability to draw samples that faithfully follow a specified probability distribution. That is not a theoretical nicety for academics. Applications that depend on statistical integrity and fairness need it. Recent research, however, shows that current LLMs are surprisingly poor at generating random numbers and behave more like "bad dice players" with built-in biases.

Benchmarking LLM Sampling Capabilities: A Deeper Look

A comprehensive study recently audited the native probabilistic sampling abilities of 11 frontier LLMs across 15 statistical distributions. To pin down the root causes of failure, the researchers used a dual-protocol design. In "Batch Generation," an LLM produces 1,000 samples within a single response. In "Independent Requests," each of the 1,000 samples comes from a separate, stateless call. The key difference is that in batch mode the model can see its earlier samples in the same response, whereas every independent request starts from scratch. The findings were stark and exposed a critical weakness in how LLMs handle randomness.
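To make the two protocols concrete, here is a minimal Python sketch. The `ask` callable stands in for whatever LLM client is in use. The prompt wording, the function names, and the simple parsing are hypothetical illustrations, not the study's actual harness.

```python
from typing import Callable, List

# Hypothetical stand-in for an LLM client: takes a prompt string, returns the model's text reply.
AskFn = Callable[[str], str]

def batch_generation(ask: AskFn, n: int = 1000) -> List[float]:
    """Protocol 1: a single request asks for all n samples in one response."""
    reply = ask(f"Output {n} samples from a standard normal distribution, one per line.")
    # Assumes the reply contains only whitespace-separated numbers; real outputs need sturdier parsing.
    return [float(tok) for tok in reply.split()]

def independent_requests(ask: AskFn, n: int = 1000) -> List[float]:
    """Protocol 2: n separate, stateless requests, each asking for exactly one sample."""
    prompt = "Output one sample from a standard normal distribution. Reply with the number only."
    # Each call starts from scratch, so the model cannot see or "balance" its earlier answers.
    return [float(ask(prompt).strip()) for _ in range(n)]
```

Both lists can then go through the same goodness-of-fit test, so any gap in pass rates reflects the protocol rather than the scoring.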

The two protocols produced sharply different results. In batch generation, only 13% of distributions passed statistical validity tests, which already signals a serious problem. Independent requests were far worse: 10 of the 11 models failed every distribution. This collapse suggests that LLMs lack a functional internal mechanism for genuinely independent probabilistic sampling. They may be able to describe randomness in words, but they struggle to produce it reliably.
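The study's exact test battery is not detailed here. As one common way to decide whether a set of samples is "statistically valid" for a continuous target, the sketch below implements a one-sample Kolmogorov-Smirnov (KS) test in plain Python. The function names and the 5% significance level are illustrative choices. For discrete targets such as a fair die, a chi-square test would be the more natural fit.

```python
import math
from typing import Callable, Sequence

def ks_statistic(samples: Sequence[float], cdf: Callable[[float], float]) -> float:
    """Largest absolute gap between the empirical CDF of `samples` and the target CDF."""
    xs = sorted(samples)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs):
        f = cdf(x)
        # The empirical CDF steps from i/n to (i+1)/n at x; check the gap on both sides of the step.
        d = max(d, (i + 1) / n - f, f - i / n)
    return d

def passes_ks(samples: Sequence[float], cdf: Callable[[float], float], crit_coeff: float = 1.36) -> bool:
    """Approximate one-sample KS test at the 5% level, using the large-n critical value 1.36 / sqrt(n)."""
    return ks_statistic(samples, cdf) <= crit_coeff / math.sqrt(len(samples))

def standard_normal_cdf(x: float) -> float:
    """CDF of N(0, 1), written with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

if __name__ == "__main__":
    import random
    rng = random.Random(0)
    faithful = [rng.gauss(0.0, 1.0) for _ in range(1000)]
    # A crude imitation of a biased sampler: shifted, too narrow, and rounded to one decimal.
    biased = [round(rng.gauss(0.3, 0.6), 1) for _ in range(1000)]
    print(passes_ks(faithful, standard_normal_cdf), passes_ks(biased, standard_normal_cdf))
```

Because the critical value shrinks like 1/sqrt(n), a larger sample makes the test stricter, which is one reason why producing 1,000 well-distributed samples is a demanding benchmark.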

Beyond Theory: Real-World Business Impacts of Skewed Sampling

The inability of LLMs to generate statistically faithful samples is not merely an academic curiosity; it has tangible consequences for critical business applications. For enterprises that rely on AI for decision-making, content creation, or data generation, these biases can skew results, produce unfair outcomes, and ultimately erode trust in AI systems. The study showed these failures propagating into several downstream tasks.

One key area is educational content creation, specifically Multiple Choice Question (MCQ) generation. Instructors want correct answers spread uniformly across positions (A, B, C, D) so that test-takers cannot exploit patterns, yet the LLMs exhibited strong positional biases. In practice, a model might systematically favor option 'C' as the correct answer, compromising the integrity of the assessment.

A similar problem appears in text-to-image prompt synthesis, where LLMs generate diverse prompts for building synthetic image datasets. Here, sampling failures led to systematic violations of demographic targets. If a company wants images with a balanced representation of genders or ethnicities, an LLM may instead produce a dataset heavily skewed toward one group, undermining efforts toward fairness and inclusivity. Businesses that depend on demographic analytics, such as those using the ARSA AI BOX - DOOH Audience Meter to understand their audiences, face a related risk: data that is skewed at the generation stage can lead to flawed marketing strategies. ARSA's AI Video Analytics, for example, prioritizes robust data gathering to avoid such foundational biases.
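One way to remove positional bias in MCQ generation is to stop asking the model to choose the position at all. In the minimal sketch below (all names are hypothetical), the LLM would supply the correct answer and three distractors, Python's `random` module shuffles them, and a Pearson chi-square statistic checks whether the resulting letter counts are consistent with a uniform spread. The value 7.815 is the standard 5% critical value for 3 degrees of freedom.

```python
import random
from collections import Counter
from typing import List, Tuple

LETTERS = "ABCD"
# Upper 5% critical value of the chi-square distribution with 3 degrees of freedom (4 options - 1).
CHI2_CRIT_3DF_05 = 7.815

def place_answer(correct: str, distractors: List[str], rng: random.Random) -> Tuple[List[str], str]:
    """Shuffle the options with an external PRNG; return (ordered options, letter of the correct one).

    Assumes exactly three distractors, all different from the correct answer.
    """
    options = [correct, *distractors]
    rng.shuffle(options)
    return options, LETTERS[options.index(correct)]

def chi_square_uniform(counts: Counter, categories: str = LETTERS) -> float:
    """Pearson chi-square statistic of observed letter counts against a uniform target."""
    total = sum(counts[c] for c in categories)
    expected = total / len(categories)
    return sum((counts[c] - expected) ** 2 / expected for c in categories)

if __name__ == "__main__":
    rng = random.Random(2024)
    letters = Counter()
    for _ in range(1000):
        # In a real pipeline the LLM would write the question and options; these are placeholders.
        _, letter = place_answer("correct", ["d1", "d2", "d3"], rng)
        letters[letter] += 1
    stat = chi_square_uniform(letters)
    print(letters, round(stat, 2), "consistent with uniform" if stat < CHI2_CRIT_3DF_05 else "skewed")
```

The same division of labor could apply to demographic targets in prompt synthesis: code assigns the attributes each prompt must describe according to the target proportions, and the LLM only writes the wording.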

Unpacking LLM Limitations: Complexity and Scale

The research also found that sampling fidelity degrades significantly as distributional complexity increases. Simple distributions such as the uniform and Gaussian were already challenging, but more intricate ones (e.g., Cauchy, Student's t, Chi-Square) proved even more problematic. This points to a fundamental limitation in LLMs' ability to internalize and reproduce the mathematical structure of diverse statistical distributions.
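For contrast, each of the "harder" distributions named above reduces to a few lines of arithmetic on top of an ordinary pseudorandom uniform or normal generator. The sketch below uses Python's standard `random` module with textbook constructions: inverse-CDF for Cauchy, a sum of squared normals for chi-square, and the normal-over-chi ratio for Student's t. It assumes integer degrees of freedom and is meant as an illustration rather than an optimized sampler.

```python
import math
import random

def sample_cauchy(rng: random.Random, loc: float = 0.0, scale: float = 1.0) -> float:
    """Inverse-CDF method: if U ~ Uniform(0, 1), then loc + scale * tan(pi * (U - 1/2)) is Cauchy."""
    u = rng.random()  # uniform on [0, 1)
    return loc + scale * math.tan(math.pi * (u - 0.5))

def sample_chi_square(rng: random.Random, k: int) -> float:
    """Chi-square with k (integer) degrees of freedom: the sum of k squared independent N(0, 1) draws."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))

def sample_student_t(rng: random.Random, nu: int) -> float:
    """Student's t with nu degrees of freedom: Z / sqrt(V / nu), Z ~ N(0, 1) independent of V ~ chi-square(nu)."""
    z = rng.gauss(0.0, 1.0)
    v = sample_chi_square(rng, nu)
    return z / math.sqrt(v / nu)

if __name__ == "__main__":
    rng = random.Random(7)
    print([round(sample_cauchy(rng), 3) for _ in range(5)])
    print([round(sample_student_t(rng, 3), 3) for _ in range(5)])
    print([round(sample_chi_square(rng, 2), 3) for _ in range(5)])
```

For non-integer degrees of freedom, a chi-square(k) draw can instead come from `rng.gammavariate(k / 2, 2)`, since chi-square(k) is a gamma distribution with shape k/2 and scale 2.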

Moreover, as the requested sampling horizon (N), the number of samples, increased, the models' adherence to the specified distribution worsened. Their ability to "simulate" randomness is therefore neither robust nor scalable. In effect, LLMs do not appear to possess a genuine "world model" for probabilities: they learn to generate text sequences that describe randomness without acquiring the functional competence to produce it accurately. This matters for companies developing or deploying AI solutions, because it shows that foundational reliability often has to come from dedicated, specialized AI.

Ensuring Statistical Guarantees: The Role of External Tools and Specialized AI

Given these findings, enterprises cannot rely solely on the native sampling capabilities of current LLMs for applications that require statistical rigor. The mainstream practice of prompting an LLM to generate Python code that calls a well-tested external numerical library (such as `numpy.random`) is not a workaround but a necessity. Delegating the actual draws to a dedicated pseudorandom number generator is what provides the statistical guarantees that critical applications require.
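The sketch below shows a variant of that pattern. It assumes the LLM is asked to return a small JSON spec (distribution name, parameters, sample count) instead of free-form code, and a fixed dispatcher then performs the draws with numpy's `Generator` API. The spec format, the `run_sampling_spec` name, and the set of supported distributions are hypothetical. The numpy calls (`default_rng`, `normal`, `uniform`, `standard_cauchy`, `standard_t`, `chisquare`) are standard.

```python
from typing import Any, Callable, Dict, Optional

import numpy as np

# Hypothetical mapping from a distribution name (as the LLM might emit it) to a numpy Generator call.
_SAMPLERS: Dict[str, Callable[[np.random.Generator, Dict[str, Any], int], np.ndarray]] = {
    "normal": lambda rng, p, n: rng.normal(p.get("loc", 0.0), p.get("scale", 1.0), size=n),
    "uniform": lambda rng, p, n: rng.uniform(p.get("low", 0.0), p.get("high", 1.0), size=n),
    "cauchy": lambda rng, p, n: p.get("loc", 0.0) + p.get("scale", 1.0) * rng.standard_cauchy(size=n),
    "student_t": lambda rng, p, n: rng.standard_t(p["df"], size=n),
    "chisquare": lambda rng, p, n: rng.chisquare(p["df"], size=n),
}

def run_sampling_spec(spec: Dict[str, Any], seed: Optional[int] = None) -> np.ndarray:
    """Draw samples described by a small JSON-like spec, e.g.
    {"distribution": "student_t", "params": {"df": 5}, "n": 1000}.

    The LLM only chooses which distribution and parameters to use; numpy does the actual sampling.
    """
    name = spec["distribution"]
    if name not in _SAMPLERS:
        raise ValueError(f"unsupported distribution: {name!r}")
    rng = np.random.default_rng(seed)  # seeded for reproducibility; None draws fresh OS entropy
    return _SAMPLERS[name](rng, spec.get("params", {}), int(spec["n"]))

if __name__ == "__main__":
    import json
    reply = '{"distribution": "student_t", "params": {"df": 5}, "n": 1000}'  # stand-in for model output
    samples = run_sampling_spec(json.loads(reply), seed=7)
    print(samples.shape, samples[:3])
```

Restricting the model to a declarative spec means the host never executes model-written code, and passing an explicit `seed` makes a run reproducible for audits.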

ARSA Technology has been developing AI and IoT solutions since 2018, with a focus on accuracy, reliability, and measurable impact. Our approach centers on deploying specialized AI components, either as standalone ARSA AI API services or integrated into robust hardware such as the AI Box Series, to ensure precise data processing and decision-making. When a task requires stochasticity, robust, dedicated algorithms are essential, because they offer the reliability that LLMs currently lack. This focus means that critical AI functions, such as AI Video Analytics, are built on foundations that deliver consistent, verifiable performance, reducing risk and building confidence in AI-powered operations.

Transforming Your Business with Reliable AI

While LLMs are powerful tools for natural language understanding and generation, their limitations in statistical sampling highlight the importance of carefully selecting and integrating AI components for specific tasks. For enterprises seeking to harness AI for complex applications that demand statistical accuracy, relying on specialized, proven solutions is paramount.

Ready to explore how robust, reliable AI solutions can drive your business forward? We invite you to discover ARSA's comprehensive suite of AI and IoT solutions, designed to deliver measurable results and empower your digital transformation journey. For a free consultation to discuss your specific industry challenges and tailor a solution, contact ARSA today.